Not only the production of synthetic plant sketches, but the entire area of nonphotorealistic rendering will continue to be investigated in the years to come. The subject has been under discussion since the 1990s, and the research has now reached a second phase, with some notable attempts to systematize the area (see [215]) and to classify the related approaches.

Aside from the purely scientific interest, a starting point for the investigation of such representational forms is the realization that many illustrations in books and other traditional print media are not photographs, but rather abstract drawings, sketches, and illustrations [212]. This might be due to technical reasons such as low printing costs. Beyond the cost factor, illustrations seem to offer a better way of expressing the content of certain kinds of complex pictures. As an example, imagine a medical anatomy atlas that consisted only of photos. It would not be very expressive, because photographs can for the most part only vaguely show the internal organs of the body and their relationships to each other. Here drawings serve the purpose of such a highly specialized subject much better, since they can represent the important picture content with a few lines. Strothotte et al. emphasized such advantages of illustrations in various articles [194, 213, 214].

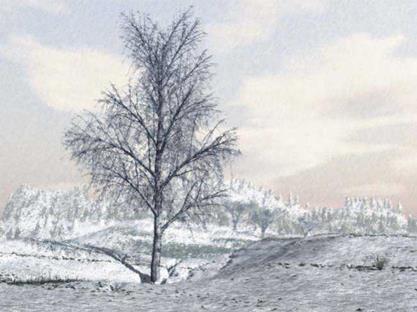

An additional advantage of nonphotorealistic rendering is the possibility of coordinating the illustration style with the image content, meaning that parts can be emphasized according to their importance. Also, different drawing styles make it possible to produce entirely different image expressions or moods for one and the same scene.

The first studies on nonphotorealistic rendering date from the time when raster displays were introduced and procedures were required to display geometric data on those output devices. Line drawings were used, and soon algorithms were developed to draw the lines in a way that brings out the geometric characteristics of objects especially well [6].

Later works focused on refining algorithms for line drawings [44, 55, 56, 57, 105]. Here the so-called “path-and-stroke” metaphor is used: a line to be drawn is described by a path, which becomes the base for a possibly very broad line or a line that consists of a pattern. The actual line then imitates, for example, a brush stroke or a pencil line. Guo and Kunii [78] use this method to imitate Chinese brush drawings. Hsu and Lee [98] extend the approach by allowing the user to draw lines with arbitrary images as well as with recursive and fractal functions along the path.
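The path-and-stroke idea can be illustrated with a minimal sketch. It is not taken from any of the cited works; the function name `stroke_outline` and the parameter `width_at` are hypothetical. The path is assumed to be a polyline of at least two points, and the stroke is built by offsetting each path point along the local normal by half of a width that may vary along the path.

```python
import math


def stroke_outline(path, width_at):
    """Return a polygon outlining a stroke drawn along `path`.

    path     -- list of (x, y) points, at least two (assumed polyline path)
    width_at -- hypothetical function mapping t in [0, 1] to a stroke width
    """
    n = len(path)
    if n < 2:
        raise ValueError("path needs at least two points")
    left, right = [], []
    for i, (x, y) in enumerate(path):
        # Estimate the tangent from the neighbouring path points.
        xa, ya = path[max(i - 1, 0)]
        xb, yb = path[min(i + 1, n - 1)]
        tx, ty = xb - xa, yb - ya
        length = math.hypot(tx, ty)
        if length == 0.0:
            raise ValueError("degenerate path segment")
        # Unit normal: the tangent rotated by 90 degrees.
        nx, ny = -ty / length, tx / length
        half = 0.5 * width_at(i / (n - 1))
        left.append((x + nx * half, y + ny * half))
        right.append((x - nx * half, y - ny * half))
    # Walk up the left side and back down the right side.
    return left + right[::-1]


# A straight path with a stroke that tapers to a point, loosely like a brush.
path = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
outline = stroke_outline(path, lambda t: 2.0 * (1.0 - t))
```

Keeping the path separate from the width function is the point of the metaphor: the same path can be redrawn as a pencil line, a broad brush stroke, or a patterned line by exchanging only the stroke description.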

For a simple form of nonphotorealistic rendering, image manipulations can be used. These work exclusively with image data, without any other information. Most image-processing programs offer various filters that stylize images in this way (see Fig. 11.1). Another important step is to include three-dimensional model information that is used to control the image production. Such information is usually available if images are generated within the framework of a rendering pipeline such as the one mentioned in Chap. 9.

Saito and Takahashi [181] use such model information to modify the image rendering, and also to add important edges of the models to the produced images. The images take on the appearance of drawings and allow realistic imagery and drawing to be merged in an elegant way. In order to find the edges, the method uses the depth buffer of the image mentioned earlier, which is used by the graphics hardware during rendering. Since a depth value is stored for each pixel, mathematical operations can be executed on the depth buffer, such as the calculation of the spatial derivative of the depth function. If pixels with large derivatives are colored, lines emerge that emphasize the important edges of the model. The results are then overlaid onto the realistic image in order to make it more expressive. The three-dimensional model information thus enters the image by way of the depth buffer. Independently of Saito and Takahashi, Lansdown and Schofield developed a similar approach, which they published as the “Piranesi” system in [112]. Their method, however, is a semi-interactive drawing program and thus appeals to a different type of user, such as artists or architects. The system is designed to change and artistically manipulate images; for example, two-dimensional areas can be mixed with projections of three-dimensional models.
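The depth-buffer step can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the implementation of Saito and Takahashi: the depth buffer is assumed to be a plain list of rows of floats, the derivative is approximated by finite differences, and the names `depth_edges`, `overlay`, and `threshold` are hypothetical.

```python
import math


def depth_edges(depth, threshold):
    """Mark pixels where the depth gradient magnitude exceeds `threshold`.

    depth -- list of rows of floats, one depth value per pixel (assumed layout)
    """
    h, w = len(depth), len(depth[0])
    edges = [[False] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Central differences, falling back to one-sided at the border.
            x0, x1 = max(x - 1, 0), min(x + 1, w - 1)
            y0, y1 = max(y - 1, 0), min(y + 1, h - 1)
            dzdx = (depth[y][x1] - depth[y][x0]) / (x1 - x0) if x1 > x0 else 0.0
            dzdy = (depth[y1][x] - depth[y0][x]) / (y1 - y0) if y1 > y0 else 0.0
            edges[y][x] = math.hypot(dzdx, dzdy) > threshold
    return edges


def overlay(image, edges, line_value=0.0):
    """Draw the edge pixels onto a grayscale image (list of rows of floats)."""
    return [
        [line_value if is_edge else pixel for pixel, is_edge in zip(img_row, edge_row)]
        for img_row, edge_row in zip(image, edges)
    ]


# A near object (depth 0.2) in front of a far background (depth 1.0).
depth = [[1.0] * 6 for _ in range(4)]
for row in (1, 2):
    for col in (2, 3):
        depth[row][col] = 0.2

edges = depth_edges(depth, threshold=0.3)
image = [[0.5] * 6 for _ in range(4)]
result = overlay(image, edges)
```

In this sketch a single threshold on the first derivative picks out depth discontinuities such as silhouettes. The choice of threshold is an assumption that depends on how the depth values are scaled.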

What was most likely the first article in computer graphics to focus on the illustrative rendering of plants was written in 1979 by Yessios [234]. The paper deals with computer-generated patterns for applications in architecture, such as tiled floor coverings, walls, and also plants, which are generated as symbolic images (see Fig. 9.1d).

Alvy Ray Smith focused on fractals and formal plant descriptions [201]. Among other models, he generated a tree that he called the “cartoon tree”. The branches of the cartoon tree carry disks instead of leaf clusters. Reeves and Blau (see Sect. 4.5) implemented a similar representation of leaves and sets of leaves, using small disks to produce their (realistic) trees. Both works are important sources of inspiration for our method using so-called abstract drawing primitives, an approach that represents the foliage of trees and shrubs with simple forms. It will be described later in this chapter.

Another article in this context was authored by Sasada [186], who uses tree sketches in an architectural environment. For the rendering of the trees, he uses an approach developed by Aono and Kunii (see Sect. 4.3). The generated images are projected as textures onto billboards. Since the method of Aono and Kunii renders only the branches, but not the leaves of the trees, the spectrum of image contents is limited.