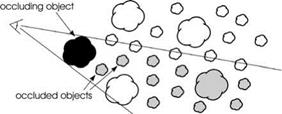

The term “occlusion culling” refers to methods that exclude all objects hidden by other objects before the actual image computation. With local rendering methods, these objects are not even transferred to the graphics hardware. With ray tracing, these procedures are used to reduce the total complexity of the scene description and to avoid unnecessary shadow computations.

In shadow computation, the visibility of the light source has to be determined for the object points, and we must find out as efficiently as possible whether one or more objects lie in between. If a very large number of objects has to be considered, occlusion culling can help to reduce this effort.
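The light-source visibility test can be illustrated with a minimal sketch. It assumes, purely for illustration, that every occluder is approximated by a bounding sphere; the names `segment_hits_sphere` and `light_visible` are hypothetical and do not come from any particular renderer. The loop visits every occluder, which is exactly the effort that occlusion culling tries to reduce by shrinking the list beforehand.

```python
def segment_hits_sphere(p, q, center, radius):
    """True if the segment from p to q touches or passes through the sphere."""
    d = [q[i] - p[i] for i in range(3)]        # direction from p to q
    f = [p[i] - center[i] for i in range(3)]   # p relative to the sphere center
    a = sum(x * x for x in d)
    if a == 0.0:                               # degenerate segment: a single point
        return sum(x * x for x in f) <= radius * radius
    # Parameter of the point on the segment closest to the center, clamped to [0, 1].
    t = max(0.0, min(1.0, -sum(f[i] * d[i] for i in range(3)) / a))
    closest = [f[i] + t * d[i] for i in range(3)]
    return sum(x * x for x in closest) <= radius * radius


def light_visible(point, light, occluders):
    """Brute-force shadow test: the light is visible from `point` if no
    occluder sphere (center, radius) blocks the connecting segment."""
    return not any(segment_hits_sphere(point, light, c, r) for c, r in occluders)


if __name__ == "__main__":
    occluders = [((0.0, 0.0, 5.0), 1.0), ((3.0, 0.0, 5.0), 1.0)]
    print(light_visible((0.0, 0.0, 0.0), (0.0, 0.0, 10.0), occluders))
```

The cost of `light_visible` grows linearly with the number of occluders, so any method that removes objects known to be irrelevant for a given region pays off directly in this loop.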

Computing the complete visibility information for every single point in a complex scene is far too time-consuming. However, it is sufficient to compute the set of potentially visible triangles for a position or, better, for a subregion of the scene (a cell). For a single position, the depth buffer of the graphics hardware can be used: it executes the visibility test for each pixel, but it has to be computed separately for each image. Using cells avoids this per-image computation.
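How a renderer might use such per-cell information can be sketched as follows. The sketch assumes a uniform grid of cubic cells and a precomputed table that maps each cell to its set of potentially visible triangle indices; the grid layout and the names `cell_of`, `potentially_visible`, and `pvs_by_cell` are assumptions made for this example only.

```python
import math


def cell_of(position, cell_size):
    """Integer grid index of the cell containing `position` (uniform grid assumed)."""
    return tuple(math.floor(c / cell_size) for c in position)


def potentially_visible(position, pvs_by_cell, cell_size, all_triangles):
    """Look up the precomputed triangle set for the viewer's cell.

    If no set was precomputed for the cell, fall back to all triangles,
    which is slower but never drops a visible triangle.
    """
    return pvs_by_cell.get(cell_of(position, cell_size), all_triangles)


if __name__ == "__main__":
    all_triangles = frozenset({1, 2, 3, 4})
    pvs_by_cell = {
        (0, 0, 0): frozenset({1, 2}),
        (1, 0, 0): frozenset({2, 3}),
    }
    print(sorted(potentially_visible((0.5, 0.2, 0.9), pvs_by_cell, 1.0, all_triangles)))
```

The lookup is independent of the exact viewer position inside the cell, so the same set serves every image rendered from that cell.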

Here, all parts of the scene that are visible from anywhere within the cell volume are determined (volume visibility). Just as with bounding objects, a conservative approximation is often used in this procedure: all objects that could be visible are drawn, and only those that are guaranteed to be occluded are discarded. However, this causes many objects to be classified as visible although they are actually not visible from most places in the cell. Consequently, the efficiency of this approach is not optimal.
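A conservative volume-visibility test can be sketched in two dimensions under strong simplifying assumptions: the cell and each object are axis-aligned boxes, each occluder is a disc, and occluders are considered one at a time. For a single convex occluder it suffices to check the sight lines between the corners of the cell and the corners of the object: if all of them are blocked, the object is hidden from every point of the cell. Ignoring the combined effect of several occluders only keeps more objects than necessary, so the result stays conservative. All names below are hypothetical.

```python
from itertools import product


def segment_hits_disc(p, q, center, radius):
    """True if the 2D segment from p to q touches or crosses the disc."""
    dx, dy = q[0] - p[0], q[1] - p[1]
    fx, fy = p[0] - center[0], p[1] - center[1]
    a = dx * dx + dy * dy
    if a == 0.0:
        return fx * fx + fy * fy <= radius * radius
    # Closest point on the segment to the disc center.
    t = max(0.0, min(1.0, -(fx * dx + fy * dy) / a))
    cx, cy = fx + t * dx, fy + t * dy
    return cx * cx + cy * cy <= radius * radius


def corners(box):
    (x0, y0), (x1, y1) = box
    return [(x0, y0), (x1, y0), (x0, y1), (x1, y1)]


def hidden_from_cell(cell, box, center, radius):
    """True only if one disc blocks every corner-to-corner sight line."""
    return all(segment_hits_disc(v, t, center, radius)
               for v, t in product(corners(cell), corners(box)))


def potentially_visible_objects(cell, objects, occluders):
    """Keep every object that is not guaranteed to be hidden from the whole cell."""
    return [name for name, box in objects.items()
            if not any(hidden_from_cell(cell, box, c, r) for c, r in occluders)]


if __name__ == "__main__":
    cell = ((0.0, 0.0), (1.0, 1.0))
    objects = {"behind": ((9.0, 0.0), (10.0, 1.0)), "side": ((4.0, 8.0), (5.0, 9.0))}
    print(potentially_visible_objects(cell, objects, [((5.0, 0.5), 3.0)]))
```

With a much smaller disc at the same position, the object behind it is hidden from some points of the cell but not from all of them, so it has to be kept. This is the loss of efficiency described above.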

If the objects are large convex polygons, efficient methods for determining occluded objects can be found (see [187]). Schaufler et al. [189] extend the method to general objects in arbitrary scenes. In this case, the scene is divided hierarchically into cells (see Fig. 9.9a) that are either fully opaque or transparent. Seen from the cell of the virtual viewer, an object occludes a number of cells in the scene description, and the objects in those cells do not need to be processed (see Fig. 9.11).

Different methods have been developed for terrains. Cohen-Or and Shaked [32], for example, remove parts of a terrain using a pixel-based method. Stewart [208] represents terrain using a quadtree with square cells, for each of which a horizon line is specified, and defines together with each cell a set of areas from which the cell is not visible. The visibility of the scene is computed in a recursive approach that determines the visibility of all cells and, if they are visible and sufficiently detailed, divides them further.
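The recursive traversal can be sketched as follows. This is not Stewart's algorithm: the horizon computation is omitted entirely, and each cell is simply assumed to carry a precomputed list of axis-aligned rectangles from which it is not visible. The `Cell` class, the `hidden_from` field, and the `max_depth` refinement limit are hypothetical choices made for this illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Cell:
    name: str
    # Rectangles ((x0, y0), (x1, y1)) from which this cell cannot be seen
    # (assumed to be precomputed, e.g. from a horizon line).
    hidden_from: list = field(default_factory=list)
    children: list = field(default_factory=list)


def inside(point, rect):
    (x0, y0), (x1, y1) = rect
    return x0 <= point[0] <= x1 and y0 <= point[1] <= y1


def visible_cells(cell, viewer, max_depth, depth=0):
    """Collect the names of the cells to draw for a viewer position."""
    if any(inside(viewer, rect) for rect in cell.hidden_from):
        return []                      # culled together with all its children
    if not cell.children or depth >= max_depth:
        return [cell.name]             # draw this cell at its current detail
    result = []
    for child in cell.children:
        result.extend(visible_cells(child, viewer, max_depth, depth + 1))
    return result


if __name__ == "__main__":
    root = Cell("root", children=[
        Cell("nw", hidden_from=[((0.0, 0.0), (2.0, 2.0))]),
        Cell("ne"), Cell("sw"), Cell("se"),
    ])
    print(visible_cells(root, (1.0, 1.0), max_depth=2))
```

Because a hidden cell is rejected before its children are visited, whole subtrees of the terrain are skipped with a single test.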

A general problem with occlusion culling for plants is the absence of complete occlusion. The leaves of plants often cover only parts of the background and thus make a conservative approximation of the occludee impossible. Satisfactory occlusion culling methods for rendering these kinds of scenes are still lacking.
